If you want to write a Python dictionary to a JSON file in S3, you can use the code examples below.

There are two code examples below that do the same thing. boto3 provides two interfaces for accessing and editing AWS S3, a client and a resource, and there is one example for each.

- Example: boto3 client

- Example: boto3 resource

- Converting a Dictionary to JSON String

- Lambda Function writing Dictionary to JSON S3 objects

Related: Reading a JSON file in S3 and store it in a Dictionary using boto3 and Python

Writing Python Dictionary to an S3 Object using boto3 Client

import boto3

import json

from datetime import date

data_dict = {

'Name': 'Daikon Retek',

'Birthdate': date(2000, 4, 7),

'Subjects': ['Math', 'Science', 'History']

}

# Convert Dictionary to JSON String

data_string = json.dumps(data_dict, indent=2, default=str)

# Upload JSON String to an S3 Object

client = boto3.client('s3')

client.put_object(

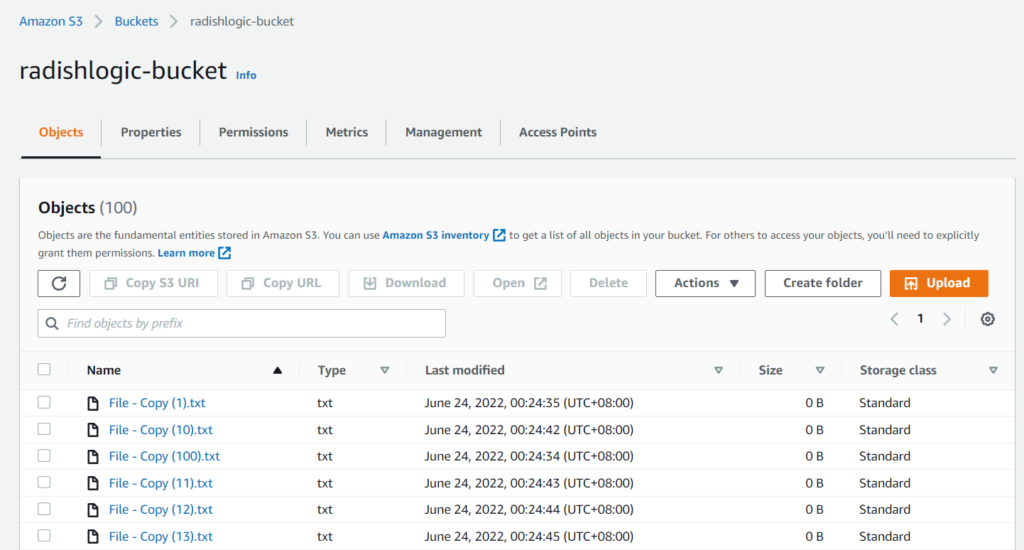

Bucket='radishlogic-bucket',

Key='s3_folder/client_data.json',

Body=data_string

)
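If you upload dictionaries in more than one place, you can wrap the two steps above in a small helper. The sketch below is a hypothetical function, `put_dict_as_json`, not part of boto3. It assumes the first argument is an S3 client created with `boto3.client('s3')`. It also sets `ContentType='application/json'`, an optional `put_object` parameter the original example does not use. The `default=str` argument matters here: without it, `json.dumps` raises a `TypeError` on the `date` value.

```python
import json
from datetime import date
from typing import Any


def put_dict_as_json(s3_client: Any, bucket: str, key: str, data: dict) -> str:
    """Hypothetical helper: serialize `data` to JSON and upload it to s3://bucket/key.

    `s3_client` is assumed to be a boto3 S3 client, e.g. boto3.client('s3').
    Returns the JSON string that was uploaded.
    """
    # default=str falls back to str() for values json cannot encode natively,
    # so date(2000, 4, 7) is written as "2000-04-07".
    body = json.dumps(data, indent=2, default=str)

    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body.encode('utf-8'),       # send the JSON text as UTF-8 bytes
        ContentType='application/json',  # optional: tags the object as JSON
    )
    return body
```

Calling `put_dict_as_json(boto3.client('s3'), 'radishlogic-bucket', 's3_folder/client_data.json', data_dict)` uploads the same content as the example above and also sets the object's Content-Type. Passing the client in, rather than creating it inside the function, lets you reuse one client across many uploads.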